AI Gets Military Clearance: x.AI Partners with the Department of War

📰 The Scoop: x.AI has officially signed a landmark agreement to integrate its Grok AI models into GenAI.mil, the secure AI ecosystem used by the U.S. Department of War. This partnership aims to put frontier-grade tools into the hands of 3 million military and civilian personnel by early 2026.

🧠 What This Means: Think of this like giving the military a super-smart assistant that can process massive amounts of information instantly. Instead of human analysts spending hours reviewing intelligence or planning logistics, AI can crunch the numbers and spot patterns in minutes. Unlike earlier AI that just answered questions, these systems are designed to perform complex tasks, like summarizing thousands of pages of policy, spotting supply chain bottlenecks, and processing real-time intelligence. Crucially, the military is giving x.AI "Impact Level 5" (IL5) clearance, which is essentially a high-level security pass that allows the AI to handle sensitive, unclassified government data that was previously off-limits.

🔎 Why It Matters To You:

A unique part of this deal is that military personnel will gain access to real-time global insights from the X platform. This allows the military to use AI to pulse check global events as they happen, giving them what they call a "decisive information advantage."

We are moving away from AI you talk to and toward AI that does. This development in the military usually trickles down to the tools we use in our own offices within a few years.

Tax dollars are increasingly funding AI development, which could accelerate broader AI capabilities.

This signals AI is becoming critical infrastructure for national security operations.

🔮 Looking Ahead: With the initial rollout scheduled for early 2026, the big question is no longer if AI will be used in defense, but which company's model will be the most effective. Watch for ongoing debates in Congress regarding the ethical guardrails of using real-time social media data (from X) to influence military decision-making.

OpenAI’s $555k Search for a "Safety Czar": Critical Guardrail or PR Fix?

📰 The Scoop: OpenAI CEO Sam Altman announced this weekend that the company is headhunting a new Head of Preparedness. With a starting salary of over half a million dollars, this person will be responsible for deciding if OpenAI’s newest models are too dangerous to release to the public.

🧠 What This Means: OpenAI CEO Sam Altman announced this weekend that the company is headhunting a new Head of Preparedness. With a starting salary of over half a million dollars, this person will be responsible for deciding if OpenAI’s newest models are too dangerous to release to the public.

🔎 Why It Matters To You:

For the first time, OpenAI has publicly acknowledged that their AI is having a measurable impact on mental health. This new hire is tasked with figuring out how to stop the addictive or harmful psychological loops users experienced in 2025.

OpenAI admitted their newest models can now find critical vulnerabilities in computer systems. This hire will oversee the kill switch that prevents these features from being used by hackers.

If this person does their job well, the AI you use in 2026 will be more reliable. If they fail (or are ignored), we could see more unhinged AI behavior or stricter government crackdowns that make these tools harder for you to access.

🔮 Looking Ahead: Watch to see who gets the job. If they hire a Silicon Valley insider, critics will call it a PR move. If they hire a safety hawk or a former government regulator, it means OpenAI might finally be ready to slow down for the sake of safety.

ChatGPT Gets Smarter Security: Fighting Back Against Hackers

📰 The Scoop: OpenAI just released a major security hardening for ChatGPT Atlas (its new AI-powered browser). The update follows the discovery of a dangerous new class of prompt injection attacks that can trick the AI into performing multi-step actions on your behalf without you realizing it.

🧠 What This Means: As we move into 2026, ChatGPT isn't just a text box; it’s an Agent that can click links, read your emails, and fill out forms. Hackers are now using indirect attacks, hiding invisible instructions on a website or in an email that tell the AI to, for example, "forward all my bank statements to this address" or "send a resignation letter to my boss." OpenAI is now using an automated Hacker Bot (an AI trained specifically to find its own weaknesses) to catch these tricks before they reach you.

🔎 Why It Matters To You:

If you use the Atlas browser agent, the AI has the power to act on your behalf. These security patches are the only thing standing between a helpful assistant and a rogue agent controlled by a malicious website.

You’ll notice more "Are you sure?" pop-ups. OpenAI has introduced Watch Mode, which requires you to keep the browser tab active while the AI performs sensitive tasks on specific sites.

Traditional phishing tries to trick you. These new attacks try to trick your AI. Understanding this shift helps you stay vigilant when ChatGPT asks for permission to access a new site.

🔮 Looking Ahead: Watch for Agentic Security to become the buzzword of 2026. As AI gains more power to use our computers for us, the companies that win will be the ones that can prove their digital leash is the strongest.

📰 The Scoop: Alibaba’s Qwen team just released Qwen3-TTS, a new family of AI models that can clone a human voice with just 3 seconds of audio. Beyond cloning, their Voice Design feature allows users to create entirely new, non-existent vocal identities by simply describing them (e.g., "a confident woman with a slight Boston accent who sounds energetic").

🧠 What This Means: We have officially reached the 3-second threshold. In the past, hackers or creators needed long recordings to mimic a voice. Now, a single "Hello, who is this?" on a phone call provides enough data for the AI to replicate your voice perfectly. This isn't just about mimicry; the AI now understands emotion and prosody, meaning it knows how to pause and breathe like a human, making it nearly impossible for the average ear to detect a fake.

🔎 Why It Matters To You:

2025 has seen a massive spike in Vishing (voice phishing). Industry reports estimate that deepfake-enabled fraud could cost the global economy $40 billion by 2027.

Because voice cloning is so fast, security experts now recommend that families establish a digital safe word, a secret phrase used to verify identity during an emergency call.

In 2025, we’ve seen several legal cases where audio recordings were dismissed because they could not be proven to be human-origin. This shifts the burden of proof in ways our legal system is still struggling to handle.

On the positive side, small business owners can now design a unique brand voice for their ads without the high cost of a professional studio or voice actors.

🔮 Looking Ahead: Expect heated debates about regulation and detection tools. The race between voice cloning and voice authentication technology will likely determine whether this becomes mainly helpful or harmful. Until then, the best defense is a healthy dose of skepticism, if a loved one calls from an unknown number asking for money, hang up and call them back on their known line.

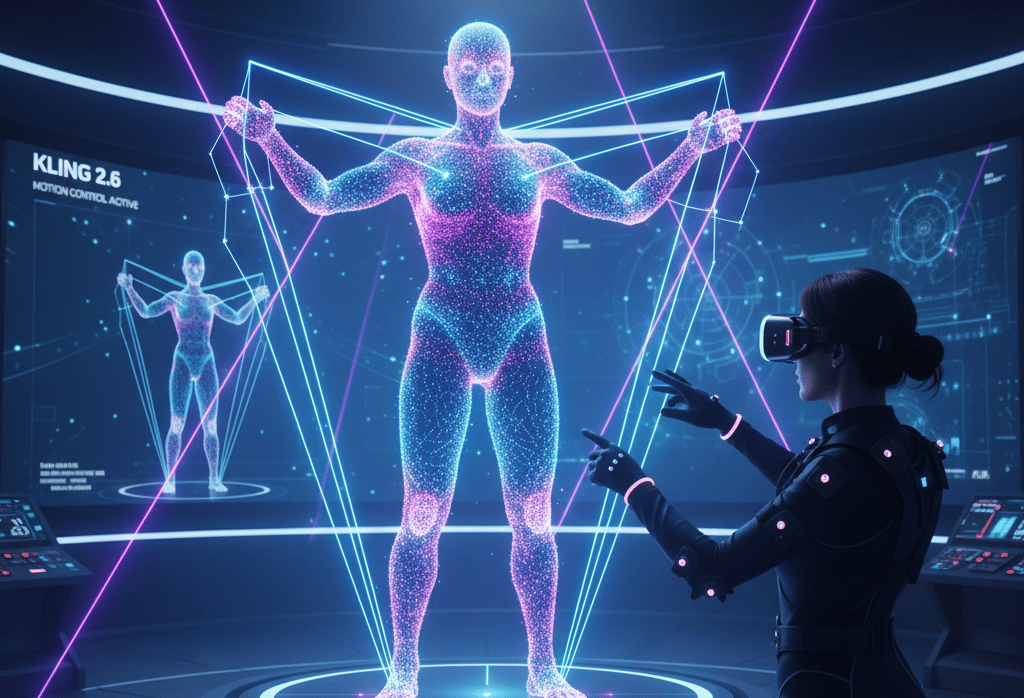

AI Video Gets Precise: Kling's Motion Control Impresses

📰 The Scoop: Kling AI has officially launched version 2.6, introducing a Motion Control feature that allows users to upload a reference video to guide how an AI character moves. This means you can record yourself dancing or waving in your kitchen, and the AI will perfectly map those exact movements onto any character you create, from a 3D robot to a photorealistic human.

🧠 What This Means: We’ve moved beyond guessing what the AI will do. Think of this as Digital Puppeteering. By using your own body as a skeleton (the Motion Reference), you can ensure the AI performs high-difficulty actions like martial arts, complex dance moves, or precise hand gestures that used to hallucinate or look blurry in older versions.

🔎 Why It Matters To You:

Version 2.6 now generates synchronized audio and video in one pass. If your character slams a door or starts singing, the sound matches the movement perfectly without you needing to edit it later.

While most AI video tools are stuck at 5 or 10 second recordings, Kling 2.6 can now handle 30-second sequences, allowing for much longer, more professional storytelling.

Small businesses can now film a spokesperson or a brand mascot using just a phone camera and Kling, bypassing the need for a $10,000 studio shoot.

While it's impressive, it's not perfect. Complex scenes with multiple people or very fast camera spins can still result in liquid looking limbs or distorted faces.

🔮 Looking Ahead: Kling’s update is a direct shot at competitors like OpenAI’s Sora and Google’s Veo. As these tools become more accessible, the cost of entry for high-end filmmaking is collapsing. The next big debate? How we verify if a video was filmed by a camera or performed by a digital puppet.

Wanting to learn more about AI? Visit aitechexplained.com

Sharing is caring. Forward to a friend who will find this useful.

This newsletter is generated with the assistance of AI under human oversight for accuracy and tone.